Duy H. M. Nguyen

Nguyen Ho Minh Duy

Universitätsstraße 32

70569 Stuttgart, Germany

Room: 2.321

I am currently a Ph.D. candidate under the supervision of Prof. Mathias Niepert at the International Max Planck Research School for Intelligent Systems (IMPRS-IS) and the University of Stuttgart. I have also been a Researcher at the German Research Center for Artificial Intelligence (DFKI) since 2021.

My research interests include:

- Hybrid Discrete-Continuous Learning (differentiable relaxations for discrete intermediate representations)

- Scalable Algorithms for Multi-Modal Learning, with applications in Healthcare and Simulation Science

- Efficient Deep Learning (model compression, accelerated training/inference, etc.)

Please visit my Google Scholar profile for a full list of publications and my GitHub for source code.

news

| Dec 04, 2025 | 🔔 Our new work, Dual-Frequency-Aware Learning for High-Fidelity Extremely Sparse-View CBCT Reconstruction, has been accepted (with minor revision) to Transactions on Machine Learning Research (TMLR) 2025. Check it out (here)! |

|---|---|

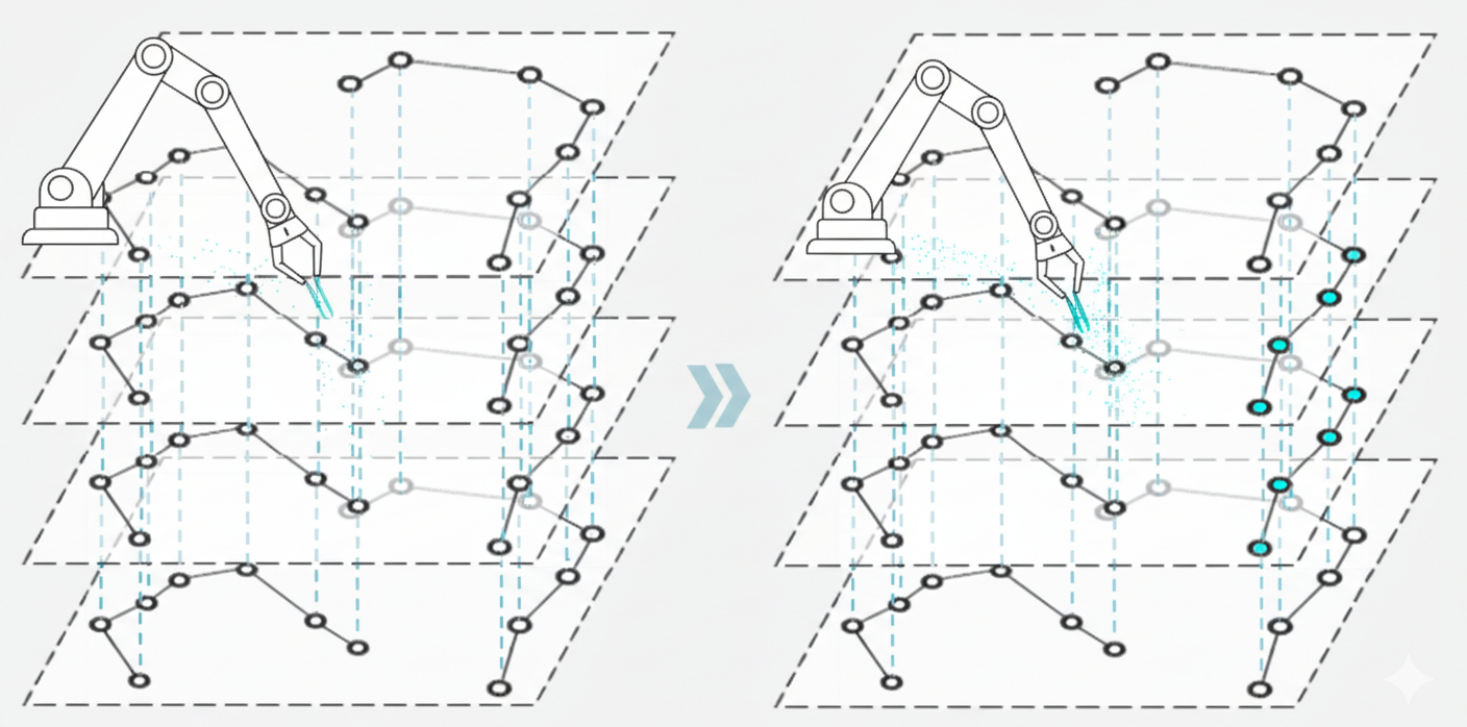

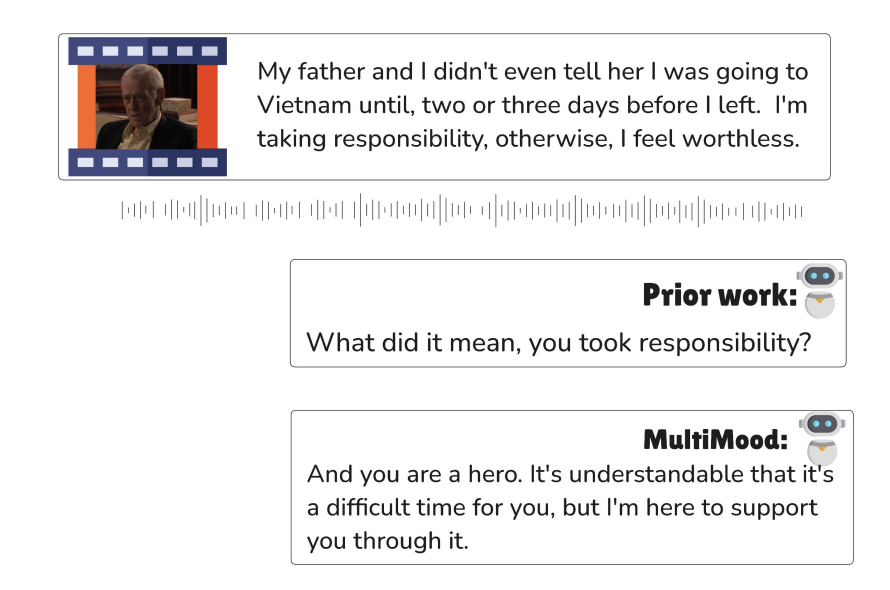

| Nov 08, 2025 | 🔔 Exciting News! We’re thrilled to share that our two recent works have been accepted to AAAI 2026 — one as an oral and the other as a poster presentation! 🎉 i. Multi-Mood — a multi-modal large language model that integrates video, audio, and text with psychological criteria through reinforcement learning to enable trustworthy and emotionally aligned responses. ii. LIBERO-Mem — a non-Markovian task suite for short- and long-horizon object tracking and manipulation, featuring temporally sequenced subgoals that challenge models to reason beyond the current observation. 📄 Codes will be released soon 🎉 — stay tuned! |

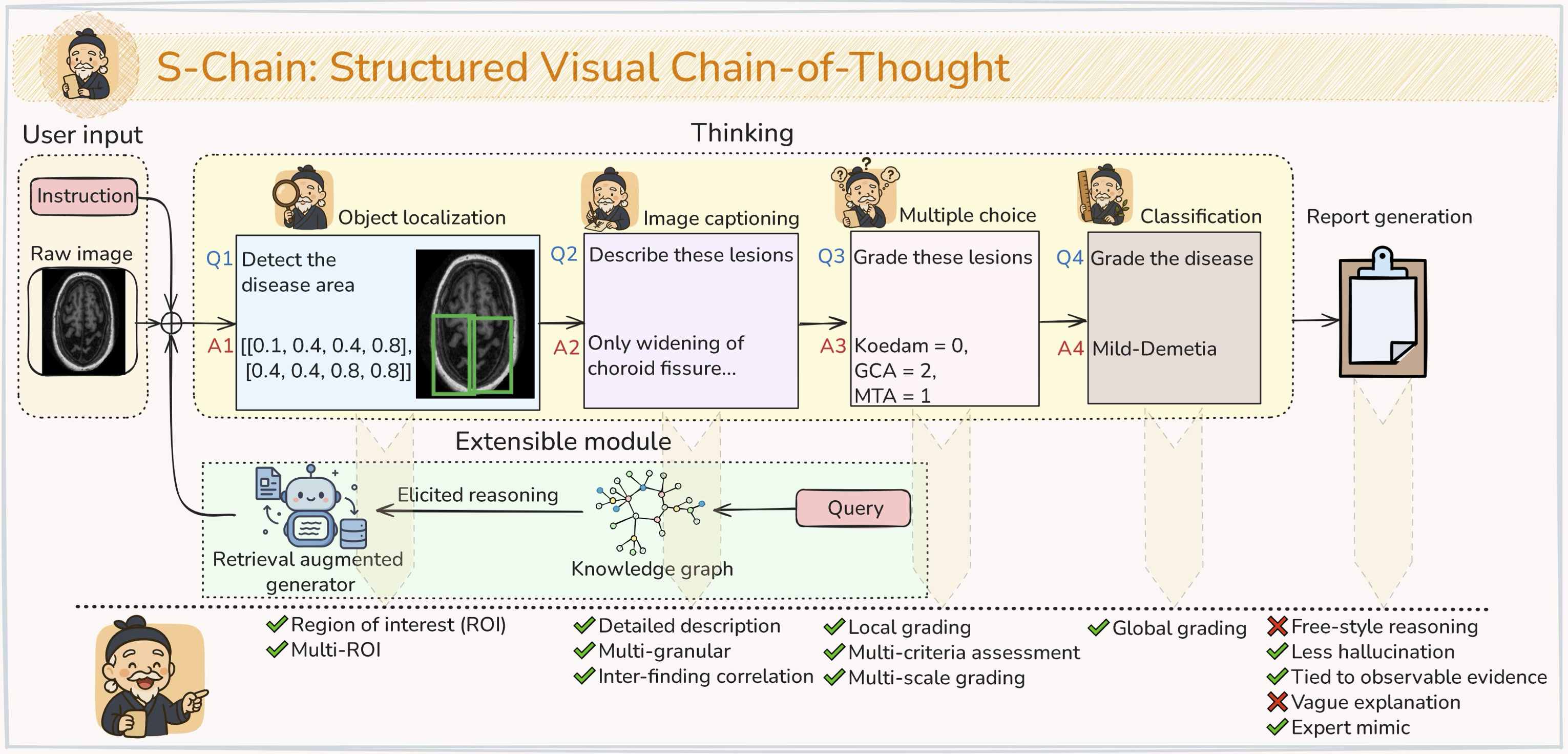

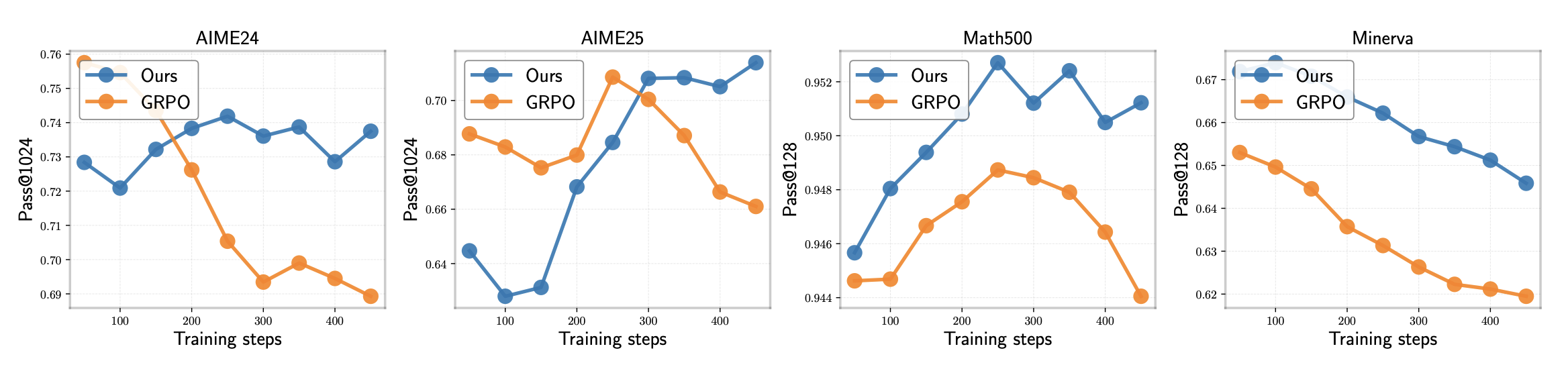

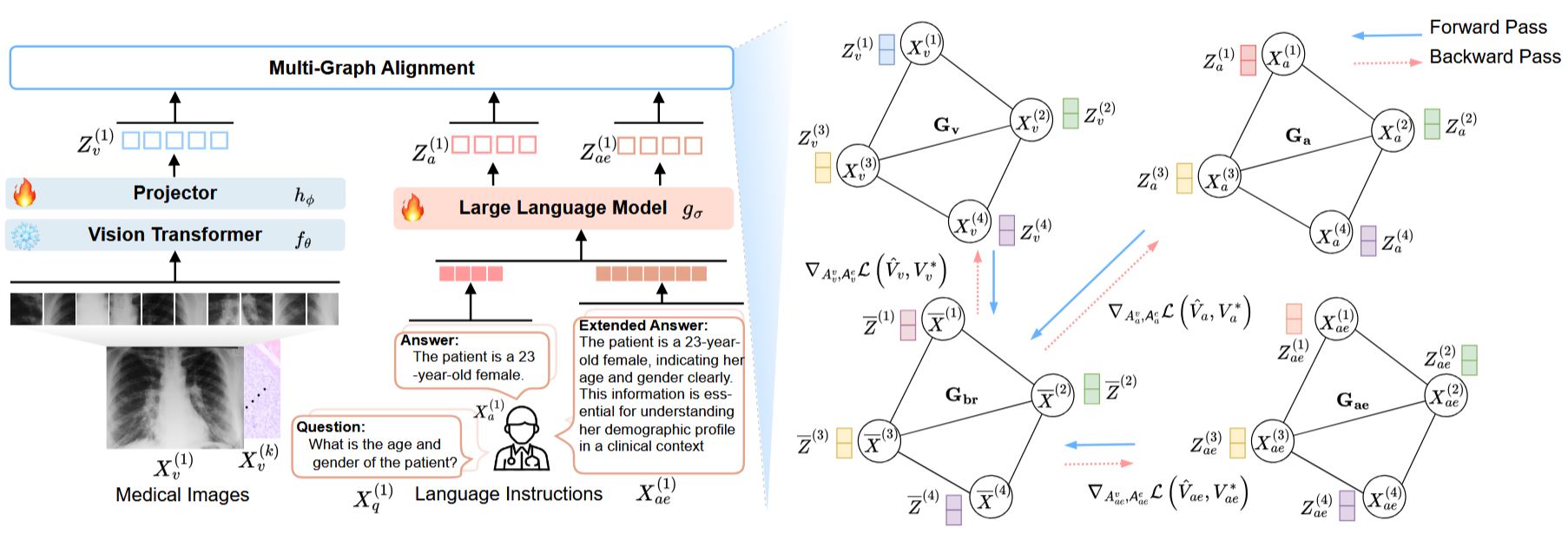

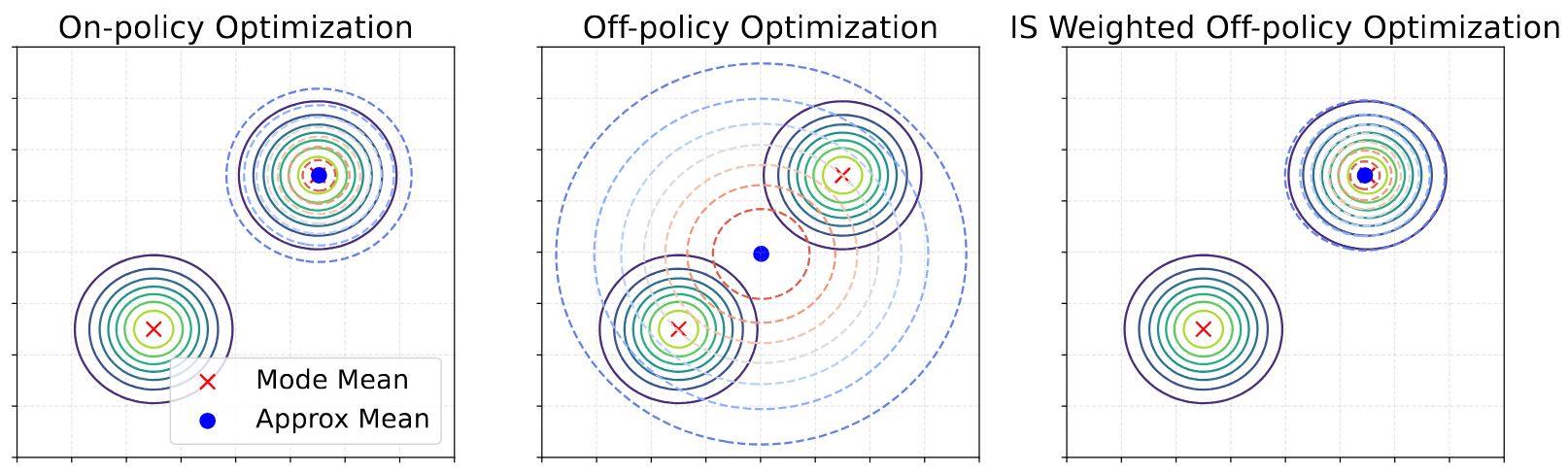

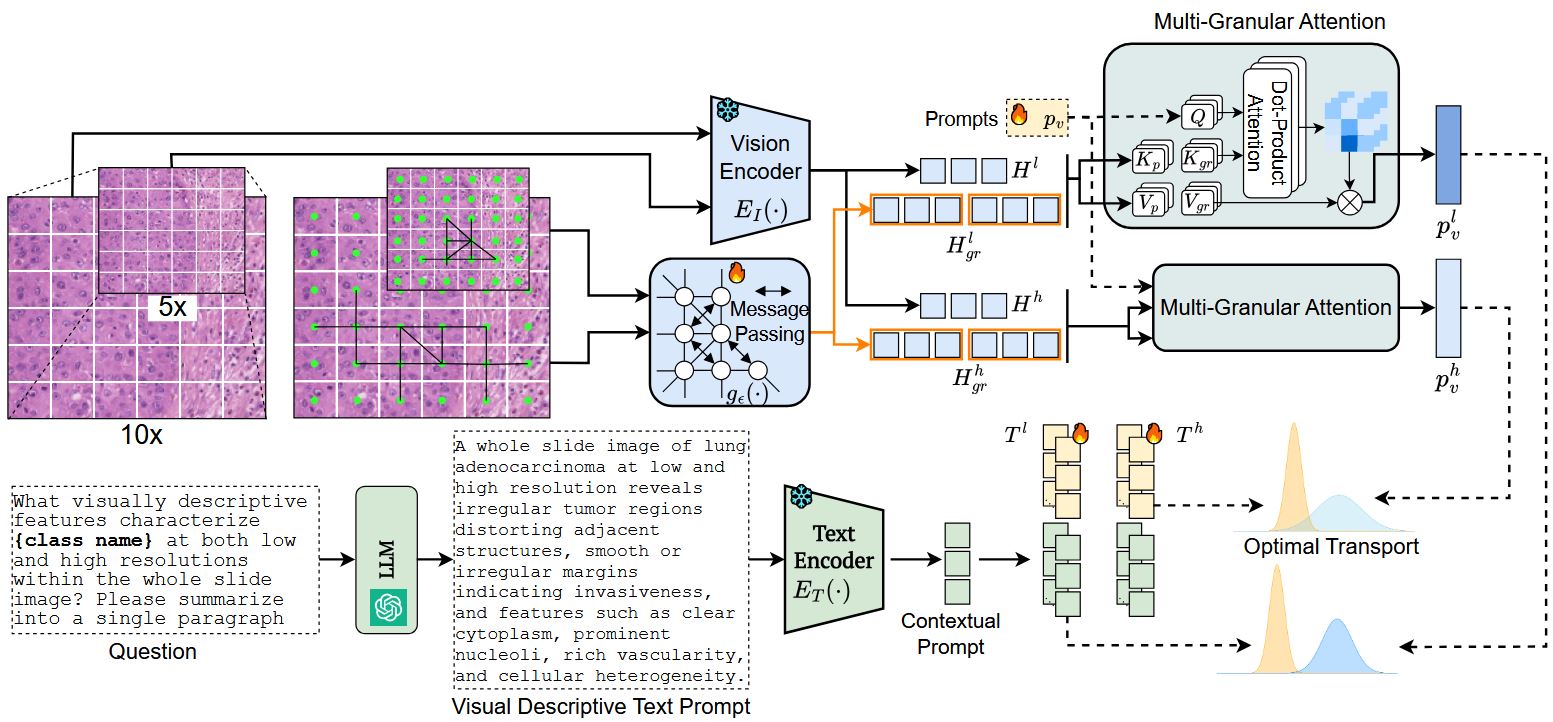

| Sep 26, 2025 | 🔔 Excited to share that our works on (i) ExGra-Med — a data-efficient multimodal large language model (LLM) for healthcare; (ii) Token Redundancy in 3D Point Cloud Transformers — uncovering how existing 3D transformers (e.g., Ptv-3, Sonata) are over-tokenized, and proposing an efficient token merging strategy that reduces computation by up to 90-95% while preserving accuracy; and (iii) Over-Optimization in RLHF for LLM Post-Training — exploring how reinforcement learning from human feedback can lead to alignment instability and proposing new insights into optimization LLM post-training have been accepted to NeurIPS 2025 🎉. Excited to present and discuss them at San Diego 🚀 |

| Sep 09, 2025 | 🌟 Excited to give a talk about my current research on Scaling Multi-Modal Learning: Hybrid Representations and Efficient Adaptation at Machine Learning Lab, School of Information and Communications Technology (SOICT), Hanoi University of Science and Technology, Vietnam and (ii) School of Computing, National University of Singapore (NUS). |

| Sep 02, 2025 | |

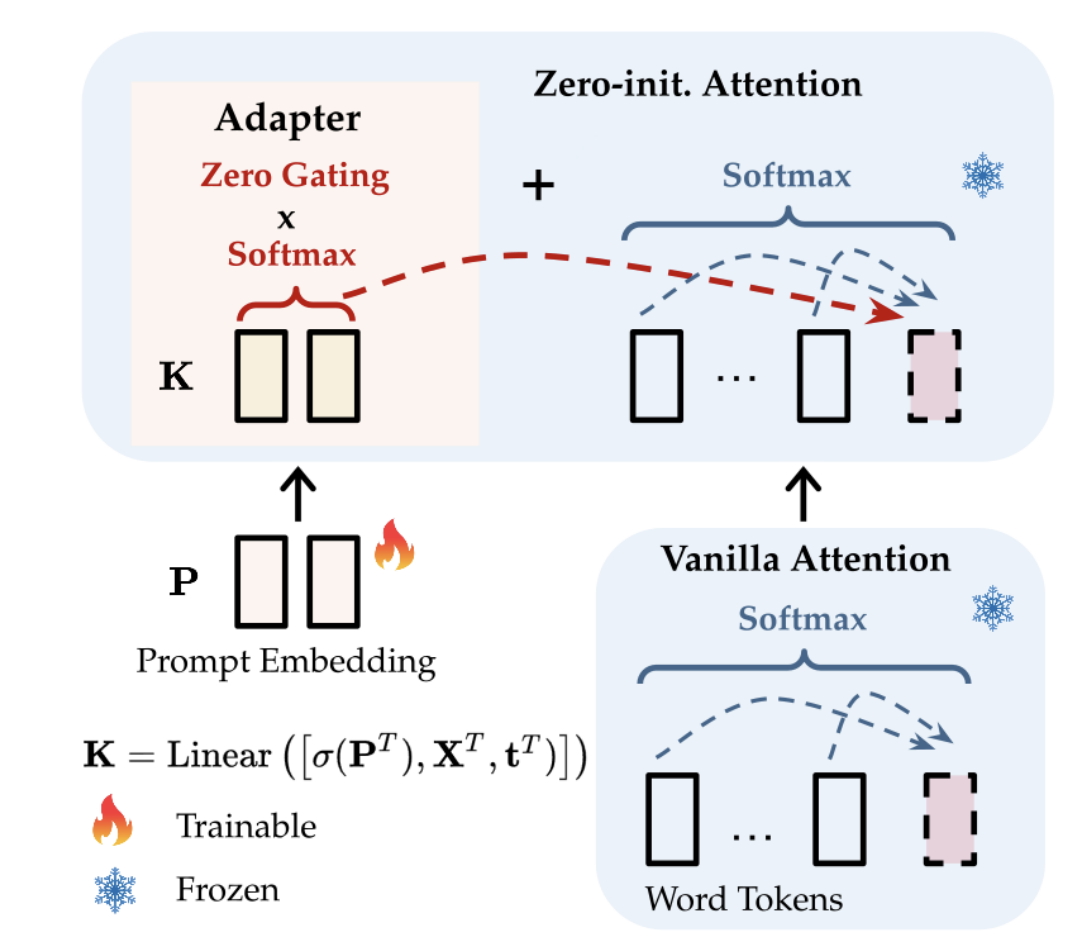

| May 01, 2025 | 🎉 Our first (i) preliminary version, MGPath has been accepted to the Workshop on Foundation Models in the Wild, ICLR 2025 and (ii) another one about LLaMA-Adapter’s prompt learning is accepted at ICML 2025. |

| Apr 20, 2025 | 🎉 Our work in building a new Inductive Message Passing Network for Efficient Human-in-the-Loop Annotation of Mobile Eye Tracking Data has been accepted at Scientific Report, Nature Portfolio. |

| Feb 20, 2025 | |

| Oct 08, 2024 | 🇨🇭 Start my visiting research at ETH AI Center, ETH Zurich. The topics are about Multi-Modal LLMs for Healthcare empowered by Retrieval-Augmented Generation. |

| Oct 07, 2024 | |

| Oct 06, 2024 | |

| Jun 10, 2024 | |

| May 01, 2024 | |

| Jan 15, 2024 | |

| Sep 22, 2023 | |

preprints

- Under Review

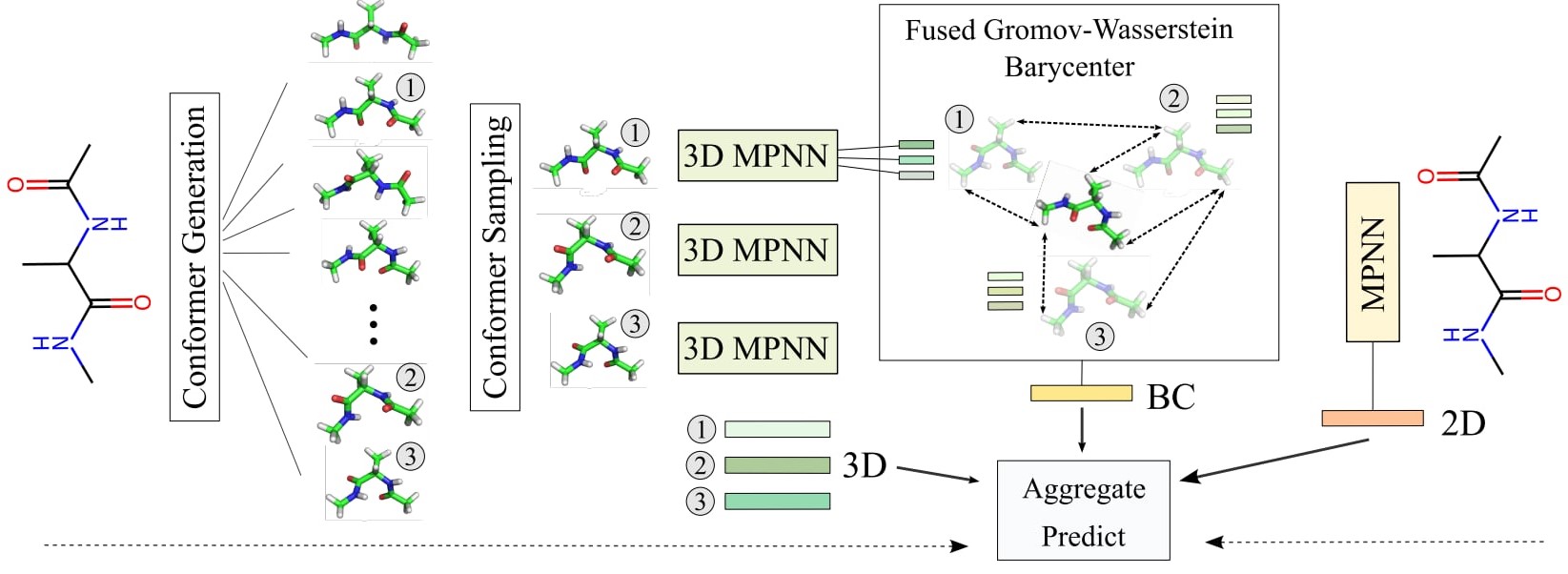

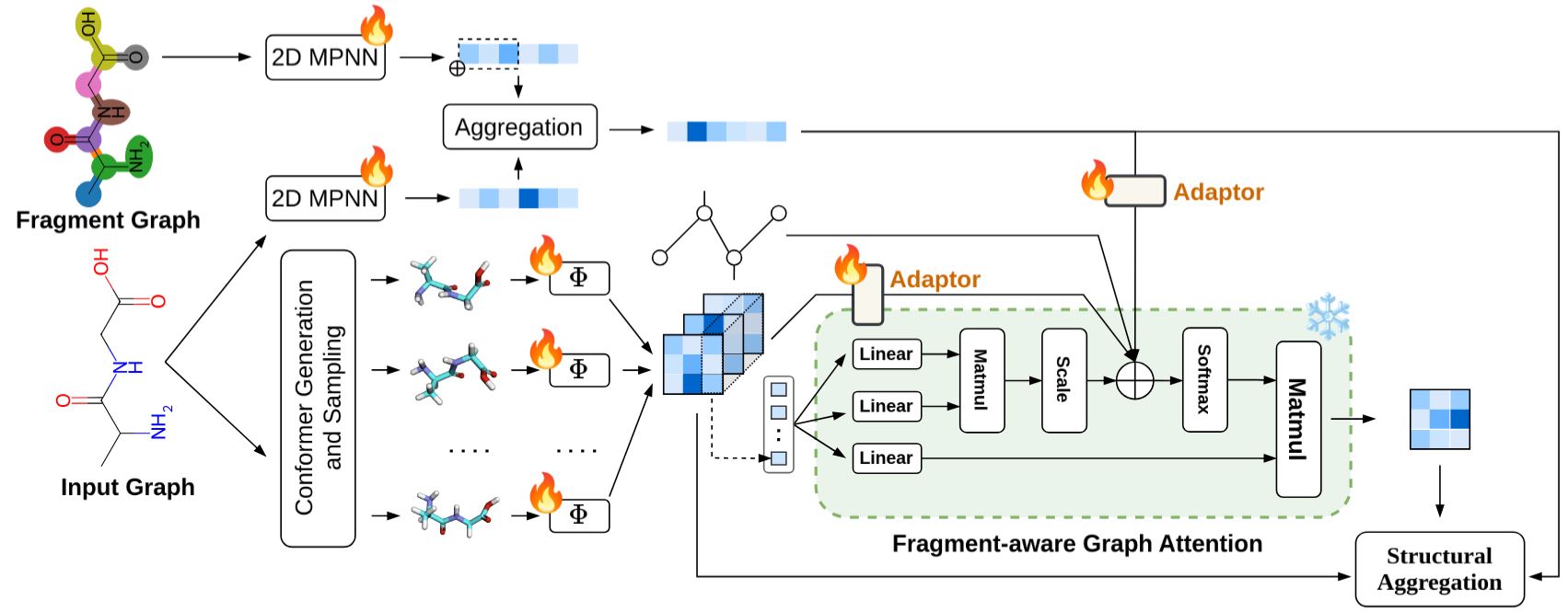

From Fragments to Geometry: A Unified Graph Transformer for Molecular Representation from Conformer Ensembles, 2025

selected publications

- AAAI (Oral)

Reinforce Trustworthiness in Multimodal Emotional Support System. Proceedings of the AAAI Conference on Artificial Intelligence, 2026

- ICLR (Oral)

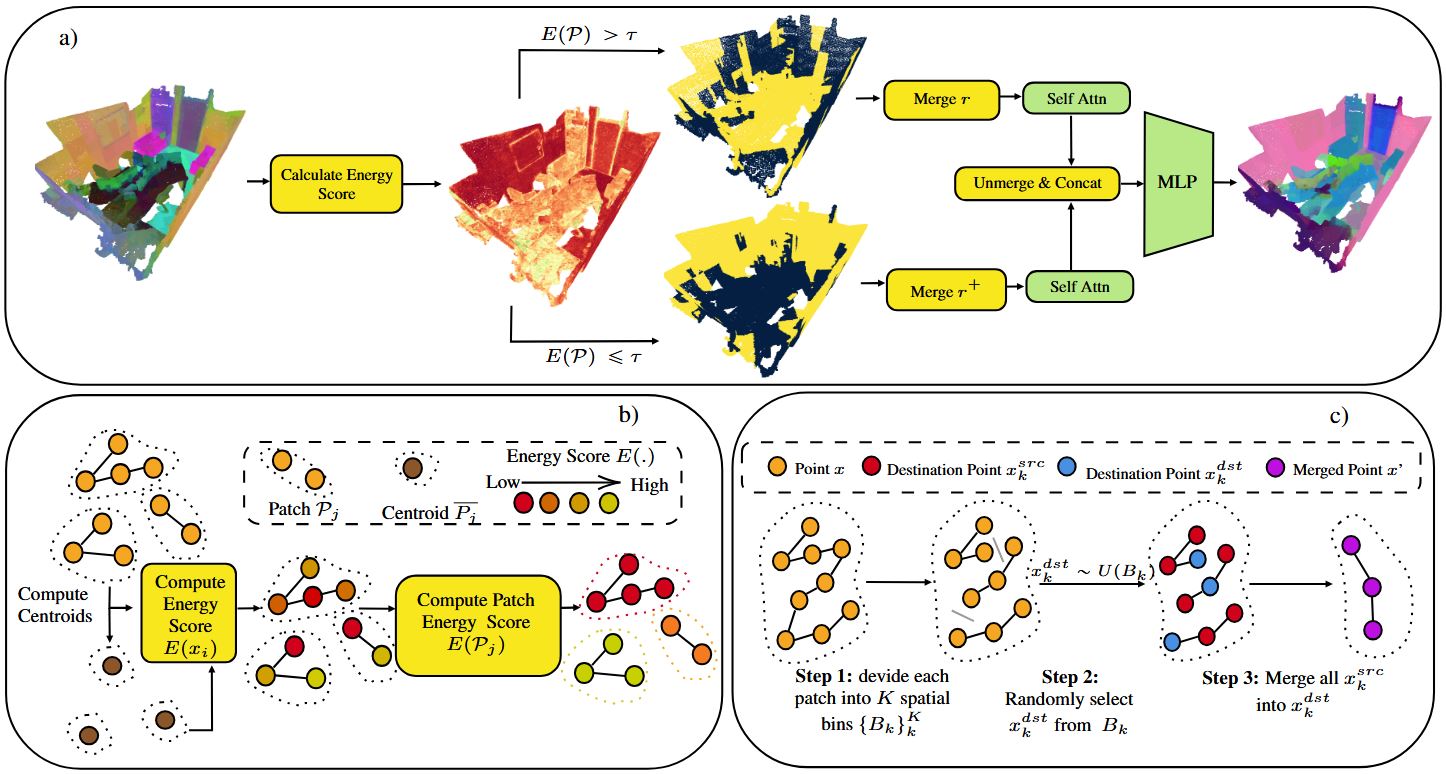

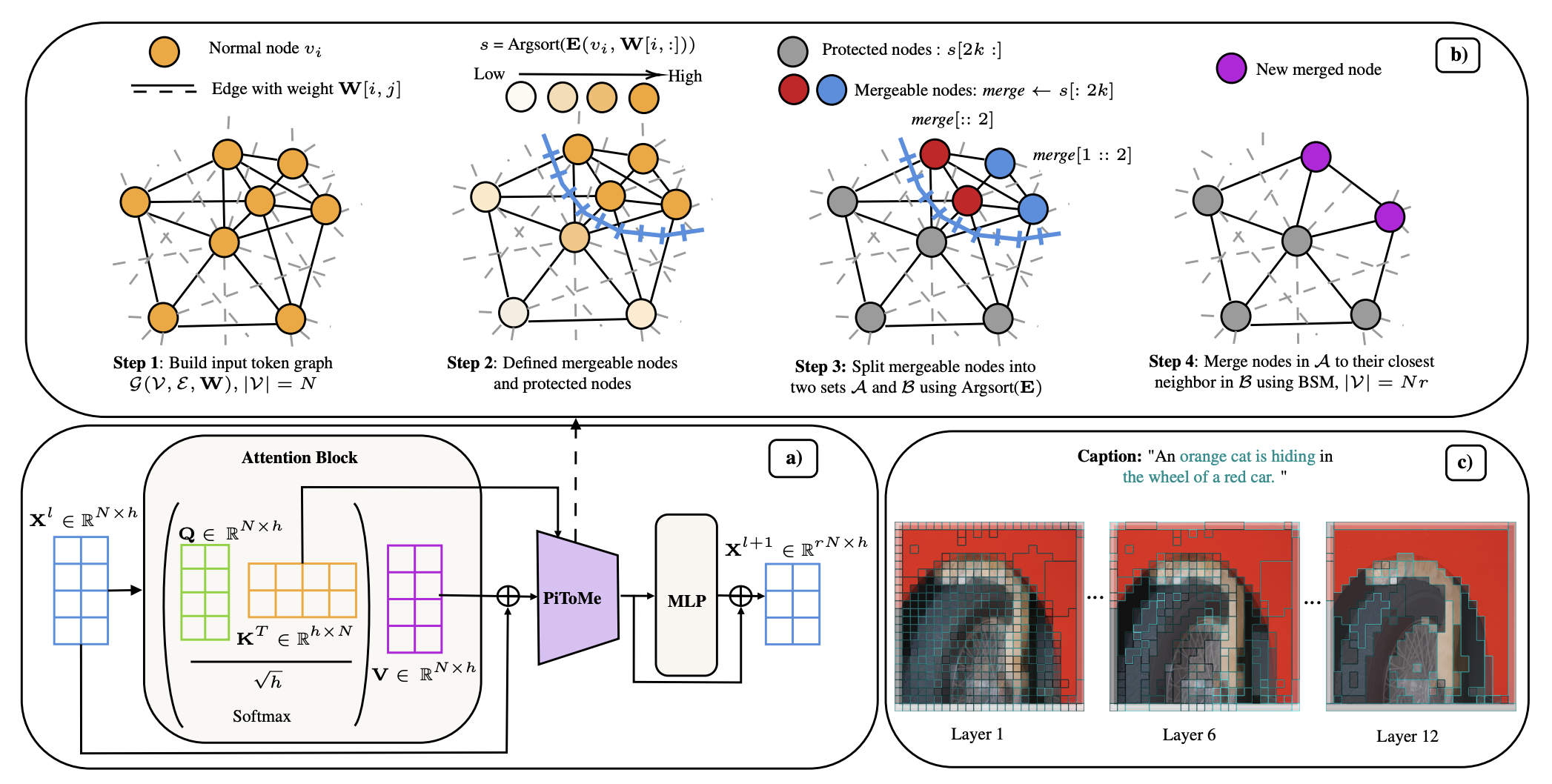

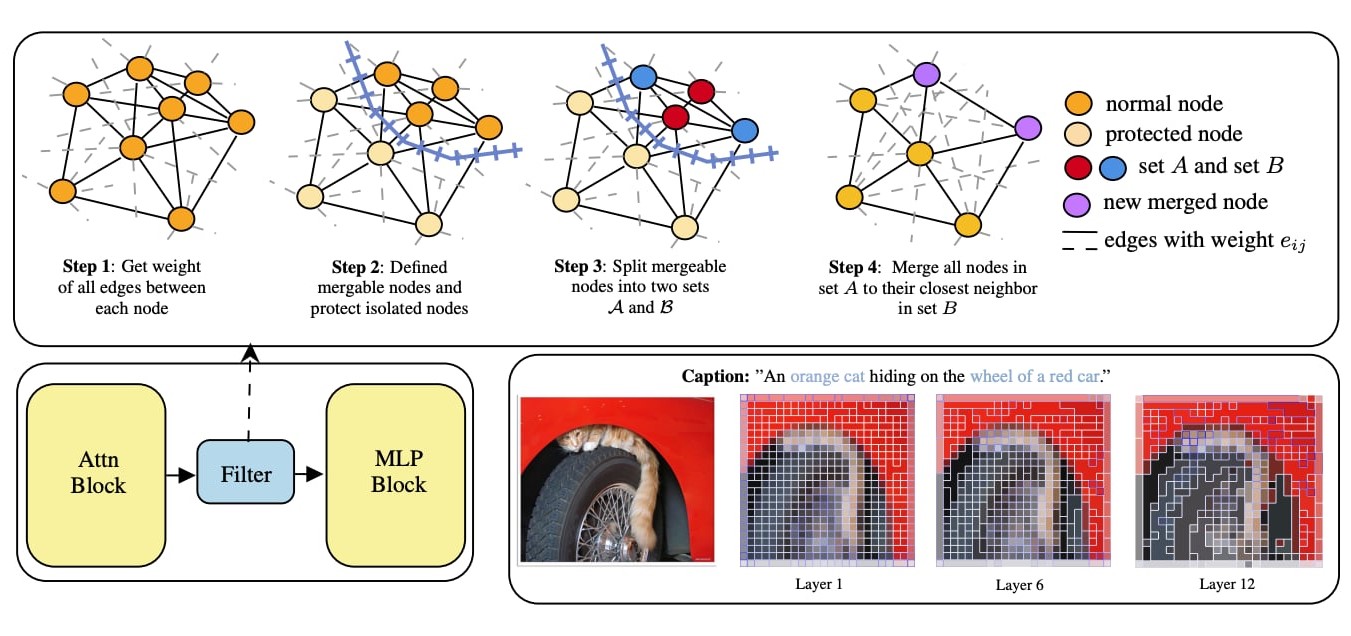

Energy minimizing-based token merging for accelerating Transformers. 5th Workshop on Practical ML for Limited/Low Resource Settings, International Conference on Learning Representations (ICLR), 2024

- CVPR

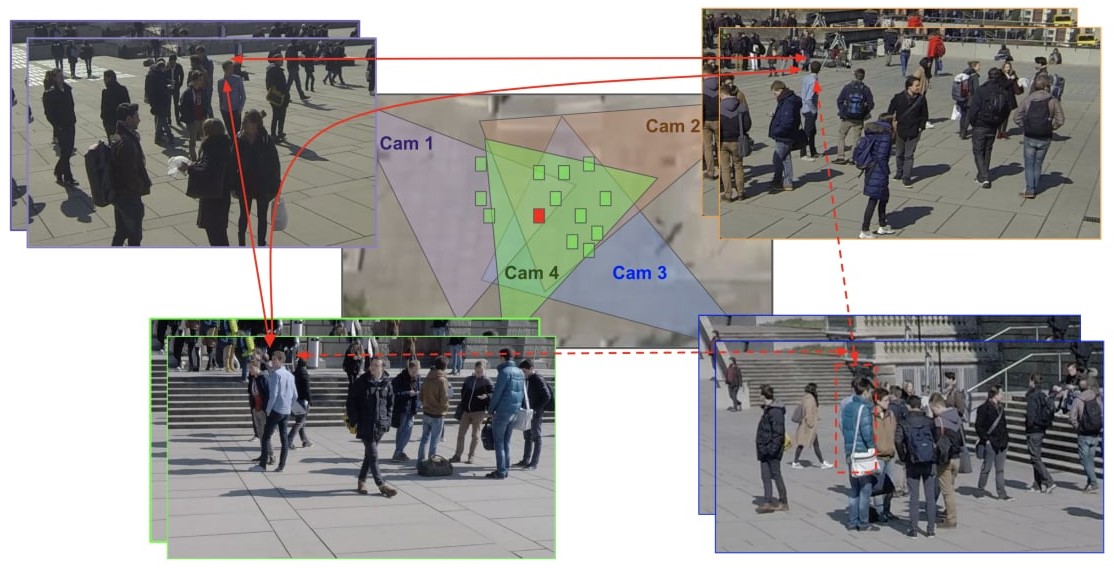

LMGP: Lifted multicut meets geometry projections for multi-camera multi-object tracking. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022